OpenAI, the company behind the popular ChatGPT, has announced the launch of its new language model, GPT-4o[1][2][3]. The “o” in GPT-4o stands for “omni,” signifying the model’s ability to handle text, speech, and video[2]. This new model is an improvement over its predecessor, GPT-4 Turbo, offering enhanced capabilities, faster processing, and cost savings for users[1].

GPT-4o is set to power OpenAI’s ChatGPT chatbot and API, enabling developers to utilize the model’s capabilities[1]. The new model is available to both free and paid users, with some features rolling out immediately and others in the following weeks[1].

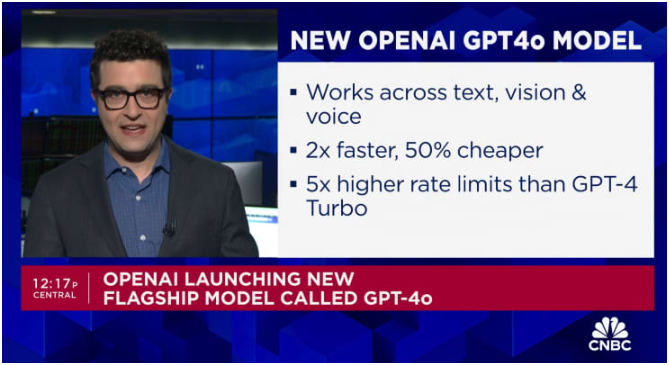

The new model brings a significant improvement in processing speed, a 50% reduction in cost, five times higher rate limits, and support for over 50 languages[1]. OpenAI plans to gradually roll out the new model to ChatGPT Plus and Team users, with enterprise availability “coming soon.” The company also began rolling out the model to ChatGPT Free users, with usage limits, on Monday, May 13, 2024[1].

In the coming weeks, OpenAI will introduce improved voice and video features for ChatGPT[1]. The voice capabilities of ChatGPT may intensify competition with other voice assistants, such as Apple’s Siri, Alphabet’s Google Assistant, and Amazon’s Alexa[1]. Users can now interrupt ChatGPT mid-response, which makes for a more natural conversation[1].

GPT-4o greatly improves the experience in OpenAI’s AI-powered chatbot, ChatGPT[2]. The platform has long offered a voice mode that reads the chatbot’s responses aloud using a text-to-speech model, but GPT-4o supercharges this, allowing users to interact with ChatGPT more like an assistant[2]. The model delivers “real-time” responsiveness and can even pick up on nuances in a user’s voice, responding with voices in “a range of different emotive styles” (including singing)[2].

GPT-4o also upgrades ChatGPT’s vision capabilities[2]. Given a photo, or a desktop screen, ChatGPT can now quickly answer related questions, ranging from “What’s going on in this software code?” to “What brand of shirt is this person wearing?”[2]. These features will evolve further in the future, with the model potentially allowing ChatGPT to, for instance, “watch” a live sports game and explain the rules[2].

GPT-4o is more multilingual as well, with enhanced performance in around 50 languages[2]. And in OpenAI’s API and Microsoft’s Azure OpenAI Service, GPT-4o is twice as fast as GPT-4 Turbo, costs half as much, and has higher rate limits[2].
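For developers, a request that pairs a question with an image might be assembled roughly as follows. This is a minimal sketch and is not taken from the cited sources. It assumes the model identifier `gpt-4o` and the message layout used by OpenAI’s Chat Completions API, it uses a placeholder image URL, and `build_vision_request` is a hypothetical helper name. It only builds the request body and does not send anything.

```python
import json

# Assumptions for illustration: the model identifier and a placeholder image URL.
MODEL_NAME = "gpt-4o"
EXAMPLE_IMAGE_URL = "https://example.com/shirt.jpg"


def build_vision_request(question: str, image_url: str, model: str = MODEL_NAME) -> dict:
    """Build a chat-style request body pairing a text question with one image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # A single user turn carrying both a text part and an image part.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


payload = build_vision_request(
    "What brand of shirt is this person wearing?", EXAMPLE_IMAGE_URL
)
print(json.dumps(payload, indent=2))
```

Sending the body would still require an API key and an HTTP client or OpenAI’s SDK, which are left out here to keep the sketch self-contained.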

During the demonstration, GPT-4o showed it could understand users’ emotions by listening to their breathing[3]. When it noticed a user was stressed, it offered advice to help them relax[3]. The model also showed it could converse in multiple languages, translating and answering questions automatically[3].

OpenAI’s announcements show just how quickly the world of AI is advancing[3]. The improvements in the models and the speed at which they work, along with the ability to bring multi-modal capabilities together into one omni-modal interface, are set to change how people interact with these tools[3].


Citations:

[1] https://www.investopedia.com/microsoft-backed-openai-unveils-most-capable-ai-model-gpt-4o-8647639

[2] https://techcrunch.com/2024/05/13/openais-newest-model-is-gpt-4o/

[3] https://www.pymnts.com/artificial-intelligence-2/2024/openai-unveils-gpt-4o-promising-faster-performance-and-enhanced-capabilities/